NextGenBeing Founder

Introduction to Neural Architecture Search

When I first started working with multimodal AI models, I realized that finding the right architecture was a daunting task. Last quarter, our team found that designing these models by hand was not only time-consuming but also led to suboptimal performance. This is where Neural Architecture Search (NAS) comes in: a technique that automates the design of neural network architectures. In this article, I'll share my experience with NAS and how it can be used to optimize multimodal AI models.

What is Neural Architecture Search?

Neural Architecture Search is a subfield of machine learning that focuses on automating the design of neural network architectures. The goal of NAS is to find a well-performing architecture for a given task by searching over candidate designs, rather than relying on manual design. This is particularly useful for multimodal AI models, which often require complex architectures to handle different types of data, such as images and text.
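To make the idea concrete, here is a minimal sketch of random search, one of the simplest NAS strategies. Everything in it is an assumption made for illustration: the search space (`SEARCH_SPACE`), the helper names, and especially `proxy_score`, which is a made-up formula standing in for "train this candidate and measure validation accuracy". It is not the setup our team used, just the general shape of a search loop.

```python
import random

# Hypothetical search space for a two-branch (image + text) model.
# These choices are illustrative assumptions, not taken from a real project.
SEARCH_SPACE = {
    "image_encoder_layers": [2, 4, 6],
    "text_encoder_layers": [2, 4, 6],
    "hidden_dim": [128, 256, 512],
    "fusion": ["concat", "sum", "cross_attention"],
}


def sample_architecture(rng):
    """Draw one candidate by picking a value for every design choice."""
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}


def proxy_score(arch):
    """Placeholder for 'train the candidate and return validation accuracy'.

    The formula is invented so the example runs instantly; a real search
    would build and train the model here.
    """
    fusion_bonus = {"concat": 0.00, "sum": 0.01, "cross_attention": 0.03}[arch["fusion"]]
    depth = arch["image_encoder_layers"] + arch["text_encoder_layers"]
    size_penalty = 0.00002 * arch["hidden_dim"] * depth  # discourage very large models
    return 0.70 + 0.01 * depth + fusion_bonus - size_penalty


def random_search(num_trials, seed=0):
    """Evaluate num_trials random candidates and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = proxy_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search(num_trials=20)
    print(arch, round(score, 4))
```

The three pieces here (a search space, a way to propose candidates, and a way to score them) show up in most NAS methods. More advanced approaches mainly replace the random sampling with a smarter proposal step and the expensive scoring with a cheaper estimate.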

Unlock Premium Content

You've read 30% of this article

What's in the full article

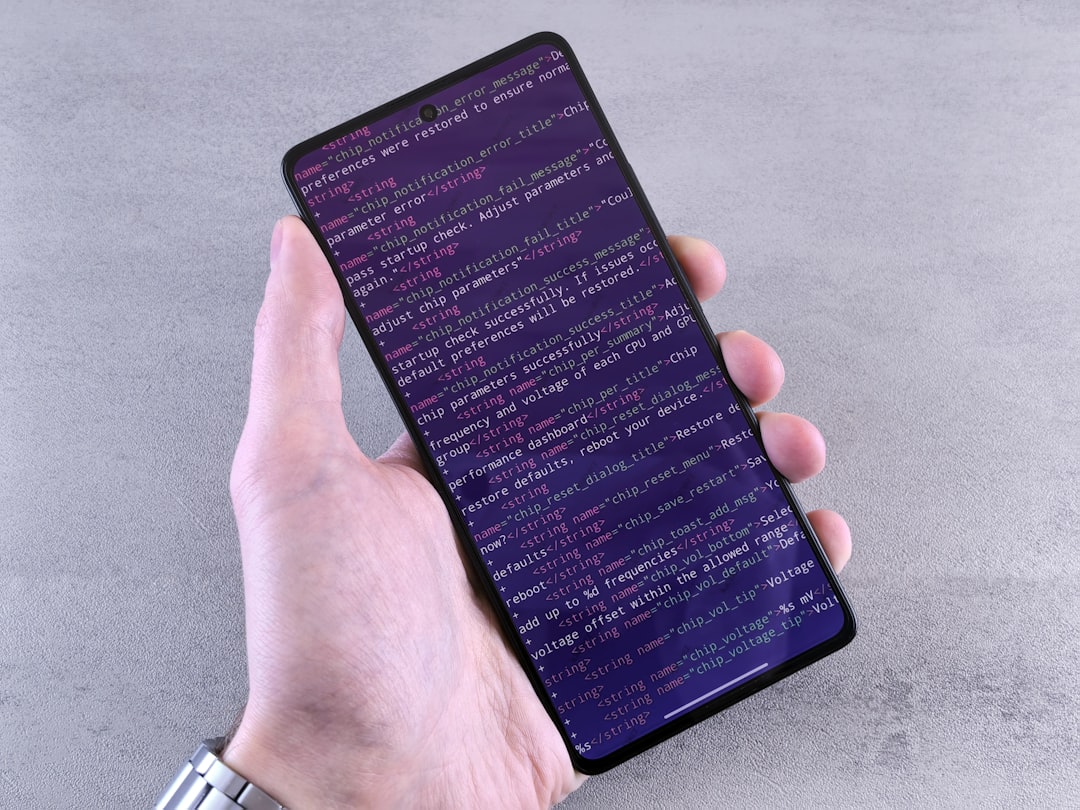

- Complete step-by-step implementation guide

- Working code examples you can copy-paste

- Advanced techniques and pro tips

- Common mistakes to avoid

- Real-world examples and metrics

Don't have an account? Start your free trial

Join 10,000+ developers who love our premium content

Advertisement

Never Miss an Article

Get our best content delivered to your inbox weekly. No spam, unsubscribe anytime.

Comments (0)

Please log in to leave a comment.

Log In