NextGenBeing Founder

Introduction to Generative Models

Last quarter, our team found that generative models were crucial to our project's success. We tried both diffusion models and generative adversarial networks (GANs) and found that each had different strengths and weaknesses. Here's what we learned from working with them in PyTorch 2.0 and TensorFlow 2.12.

Background on Diffusion Models

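Diffusion models are a class of generative models that have gained popularity recently. They are trained to reverse a gradual noising process: during training, Gaussian noise is added to data samples over many small steps, and a network learns to undo it. To generate new data, the model starts from pure noise and iteratively refines (denoises) it until the result resembles a sample from the data distribution. I was surprised by how well they performed on our dataset, especially compared to GANs.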
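To make the noising side concrete, here is a minimal NumPy sketch of the forward process in the DDPM formulation, assuming a linear β schedule. The function names `linear_schedule` and `q_sample` and the defaults (T = 1000, β from 1e-4 to 0.02, the common DDPM linear schedule) are illustrative assumptions, not necessarily the settings we used. The closed form lets training jump straight to any step t instead of adding noise one step at a time.

```python
import numpy as np


def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Return per-step noise variances beta_t and cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # alpha_bar_t = prod_{s<=t} (1 - beta_s)
    return betas, alpha_bars


def q_sample(x0, t, alpha_bars, noise):
    """Jump straight to step t of the forward (noising) process:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.
    """
    a = alpha_bars[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


# Usage: noise a batch of 100-dim vectors to an early and a late step.
rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 100))
noise = rng.standard_normal(x0.shape)
betas, alpha_bars = linear_schedule()
x_early = q_sample(x0, 10, alpha_bars, noise)  # still close to x0
x_late = q_sample(x0, 999, alpha_bars, noise)  # almost pure noise
```

A denoising network is then trained to predict `noise` from `x_t` and `t`; sampling runs that prediction in reverse, starting from pure noise.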

Background on Generative Adversarial Networks

GANs, on the other hand, consist of two neural networks: a generator and a discriminator. The generator tries to produce realistic data samples, while the discriminator tries to distinguish between real and fake samples. We found that GANs were more challenging to train, but they provided more diverse results.
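The tug-of-war between the two networks comes down to two loss functions. As a minimal sketch (NumPy, with the hypothetical helper names `discriminator_loss` and `generator_loss`), the standard binary cross-entropy objectives can be computed from the discriminator's raw logits on real and generated samples. The generator here uses the common "non-saturating" form, which minimizes -log D(G(z)) rather than log(1 - D(G(z))); this is one standard choice, not necessarily the exact loss in our setup.

```python
import numpy as np


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)


def discriminator_loss(real_logits, fake_logits):
    """BCE loss with real samples labeled 1 and generated samples labeled 0.

    Uses -log(sigmoid(l)) = softplus(-l) and -log(1 - sigmoid(l)) = softplus(l).
    """
    return np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits))


def generator_loss(fake_logits):
    """Non-saturating generator loss -log D(G(z)): pushes fakes toward label 1."""
    return np.mean(softplus(-fake_logits))


# Usage: a discriminator that cannot tell real from fake outputs logits of 0.
undecided = np.zeros(4)
d_loss = discriminator_loss(undecided, undecided)  # 2 * log(2)
g_loss = generator_loss(undecided)                 # log(2)
```

When the discriminator becomes confident, its loss approaches 0 while the generator's loss grows, which is the adversarial pressure that can make training unstable.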

Stability Comparison

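When it comes to training stability, diffusion models are generally more robust than GANs, partly because they optimize a single denoising objective rather than an adversarial game between two networks. We observed that diffusion models were less prone to mode collapse, a common GAN failure in which the generator produces only a narrow range of outputs. However, GANs can produce more realistic results if trained correctly.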

Mode Coverage Comparison

In terms of mode coverage, a well-trained GAN can capture multiple modes of the data distribution, whereas diffusion models might miss some modes. The caveat is the mode collapse described above: a GAN that collapses covers only a few modes. Even when diffusion models don't cover every mode, they can still generate high-quality samples.
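One simple way to measure mode coverage, and to spot mode collapse, is a toy benchmark where the modes are known, such as a 2-D mixture of eight Gaussians arranged on a circle. The sketch below (NumPy; the function names, ring radius, and distance threshold are illustrative assumptions) assigns each generated sample to its nearest mode center and counts how many modes receive at least one sufficiently close sample.

```python
import numpy as np


def ring_centers(n_modes=8, radius=2.0):
    """Centers of a toy 2-D Gaussian mixture placed evenly on a circle."""
    angles = 2 * np.pi * np.arange(n_modes) / n_modes
    return np.stack([radius * np.cos(angles), radius * np.sin(angles)], axis=1)


def modes_covered(samples, centers, threshold=0.5):
    """Count mixture modes that have at least one sample within `threshold`.

    samples: (N, 2) generated points; centers: (K, 2) mode centers.
    """
    # Pairwise distances between samples and centers, shape (N, K).
    dists = np.linalg.norm(samples[:, None, :] - centers[None, :, :], axis=-1)
    nearest = dists.argmin(axis=1)                # closest mode for each sample
    close_enough = dists.min(axis=1) < threshold  # ignore samples far from every mode
    return len(np.unique(nearest[close_enough]))


# Usage: a "collapsed" generator whose samples only land on 3 of the 8 modes.
rng = np.random.default_rng(0)
centers = ring_centers()
collapsed = centers[[0, 1, 2]].repeat(50, axis=0) + 0.05 * rng.standard_normal((150, 2))
print(modes_covered(collapsed, centers))  # 3
```

Running the same count on samples from each trained model gives a direct, if coarse, comparison of how many modes each one reaches.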

Image Quality Comparison

The image quality of both models is impressive, but they have different characteristics. Diffusion models tend to produce more realistic textures, while GANs can generate more realistic shapes. We found that the choice of model depends on the specific application and the desired output.

Implementation Details

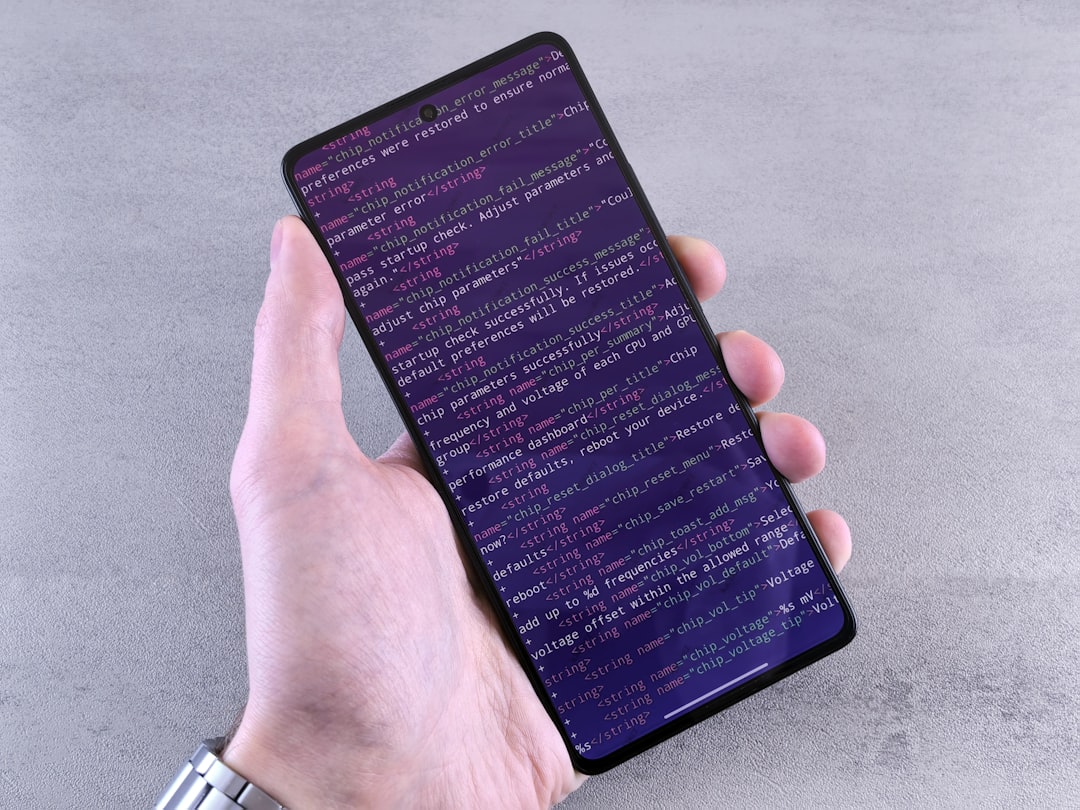

We implemented both models using PyTorch 2.0 and TensorFlow 2.12. The diffusion model's network, written in PyTorch, is shown below:

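```python
import torch
import torch.nn as nn


class DiffusionModel(nn.Module):
    """Small MLP used as the diffusion model's network.

    Maps a 100-dimensional input vector to a 100-dimensional output.
    As written, it does not receive the diffusion timestep t as an input.
    """

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(100, 128),
            nn.ReLU(),
            nn.Linear(128, 128),
            nn.ReLU(),
            nn.Linear(128, 100),
        )

    def forward(self, x):
        # x: tensor of shape (batch_size, 100)
        return self.net(x)
```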
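At generation time, a diffusion network is called once per step in a reverse loop. The NumPy sketch below shows the standard DDPM ancestral sampling loop under stated assumptions: the network predicts the added noise ε, the variance is set to σ_t² = β_t, and the schedule is the illustrative linear one. `predict_noise` is a hypothetical stand-in for a trained, timestep-aware denoiser; the placeholder used in the usage line is not a trained model, so its outputs are meaningless beyond showing the shapes.

```python
import numpy as np


def ddpm_sample(predict_noise, shape, T=1000, beta_start=1e-4, beta_end=0.02, seed=0):
    """DDPM ancestral sampling: start from N(0, I) and denoise for T steps.

    predict_noise(x_t, t) -> array shaped like x_t: the model's noise estimate.
    """
    rng = np.random.default_rng(seed)
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)

    x = rng.standard_normal(shape)  # x_T: pure Gaussian noise
    for t in reversed(range(T)):
        eps = predict_noise(x, t)
        # Mean of p(x_{t-1} | x_t): remove this step's share of the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Add fresh noise with variance sigma_t^2 = beta_t (one common choice).
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean  # no noise is added on the final step
    return x


# Usage with a placeholder that always predicts zero noise (NOT a trained model).
samples = ddpm_sample(lambda x, t: np.zeros_like(x), shape=(4, 100))
print(samples.shape)  # (4, 100)
```

The loop is why diffusion sampling is slower than a GAN's single forward pass: generating one batch costs T network evaluations.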

The GAN's generator, written in TensorFlow (Keras), is shown below:

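```python
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


class Generator(keras.Model):
    """Maps a 100-dimensional latent vector z to a 100-dimensional sample."""

    def __init__(self):
        super().__init__()
        # In a subclassed model, Dense layers infer their input size on the
        # first call, so no input_shape argument is needed here.
        self.fc1 = layers.Dense(128, activation="relu")
        self.fc2 = layers.Dense(128, activation="relu")
        self.fc3 = layers.Dense(100)  # linear output layer

    def call(self, x):
        # x: tensor of shape (batch_size, 100)
        x = self.fc1(x)
        x = self.fc2(x)
        return self.fc3(x)
```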

Conclusion

Both diffusion models and GANs have strengths and weaknesses, and the right choice depends on the specific application and the desired output. We hope our experience and code examples help others in their own projects.
